How valuable is AI in economic terms?

Some facts and thoughts on AI's coming of age

We’ve all seen the endless flood of LinkedIn clickbait announcing the end of the McKinseys and Bains of the world, and of TCS and Infosys. Right alongside it is another genre: automate everything with n8n and vibe-code almost anything you can imagine. So what happens when everyone can build whatever they want? Economic growth? That hasn’t been the case so far. Well, you’ll say it is complicated, and I would agree.

Take me, for example. I have wanted to write this article for a long time. I compiled my thoughts in bullet points and put them through ChatGPT, and for the past hour or two I have been revising prompts and generating versions… none to my satisfaction. After circling back and forth in the same window, I decided to write it myself. Is ChatGPT not useful? I swear on Sam Altman, it is very useful. But perhaps only for writing mundane SEO blogs, and not YET for producing high-quality work.

Before the ‘consultant killers’ come at me for saying this, note that Sam Altman himself agrees:

He says AI is good at tasks, not at jobs.

Although this statement is a few years old (as far as I recall) and the models have improved considerably since then, it still holds true. AI is good at things you can define at a granular level or write an SOP for, but not at running end-to-end jobs, because those require high-value decision-making. And there are other problems too.

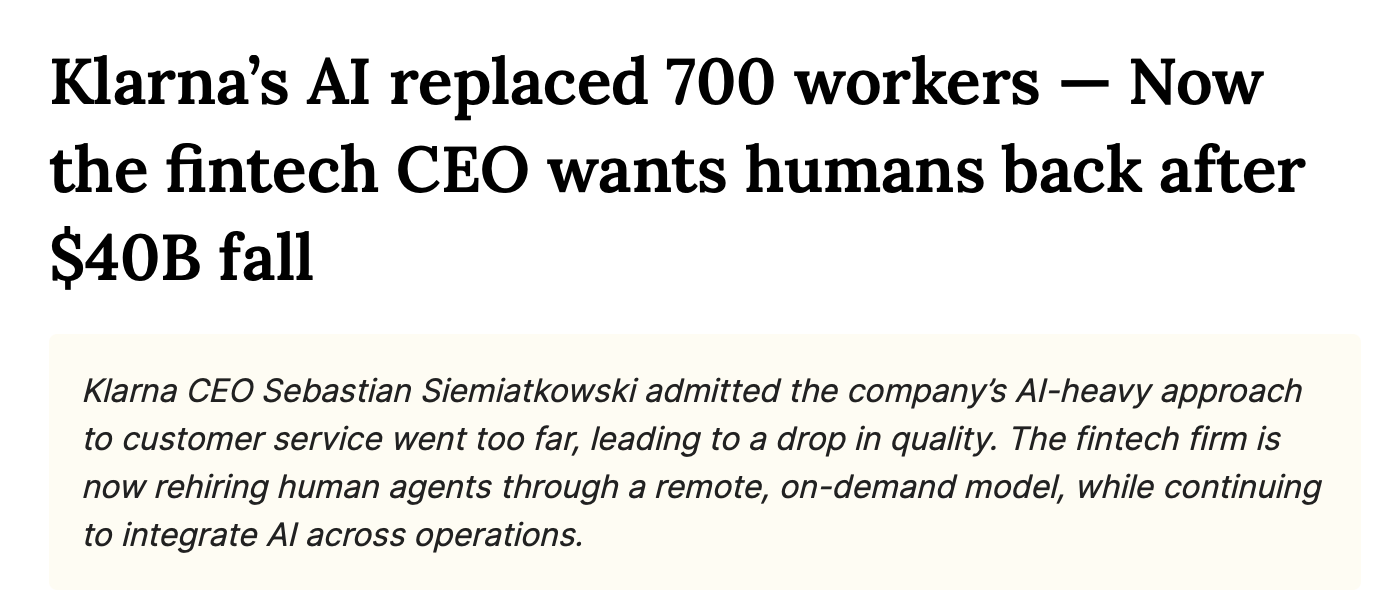

Such as this:

The same is true for vibe-coding, or anything else you can think of.

There’s a straight 15-minute rant on AI coding for anyone who’d listen:

> Now, my days are typically spent going back and forth with an LLM, and pretty often yelling at it, or telling it that it is doing the wrong thing and getting mad that it didn’t do what I asked it to do to begin with.

And from another post I came across:

> Claude Code keeps me waiting. Here I am pressing return like a crack-addicted rodent in a lab. “Yes, I want to make this edit.” I watch as it works, glassy-eyed and bored as the code scrolls by, and on the edge of my seat because my ideas are about to become reality.
>
> I’m guessing that part of why AI coding tools are so popular is the slot machine effect. Intermittent rewards, lots of waiting that fractures your attention, and inherent laziness keeping you trying with yet another prompt in hopes that you don’t have to actually turn on your brain after so many hours of being told not to.
>
> The exhilarating power of creation. Just insert a few more cents, and you’ll get another shot at making your dreams a reality.

Researchers at BetterUp Labs and the Stanford Social Media Lab, writing in Harvard Business Review, have coined a term for this: ‘workslop’. They say people are using AI tools to create low-effort, passable-looking work that ends up creating more work for their coworkers.

This comes after a report from MIT Media Lab concluded that despite $30–40 billion in enterprise investment in GenAI, 95% of organizations are getting zero return.

Mercor, working with outside experts including former Treasury Secretary Larry Summers, also released an independent benchmark (APEX-v1.0) focused on high-value knowledge jobs such as investment banking, law, and consulting. Its verdict: “None of the models meet the production bar for automating real-world tasks.” Substantial human oversight is still required for every model tested.

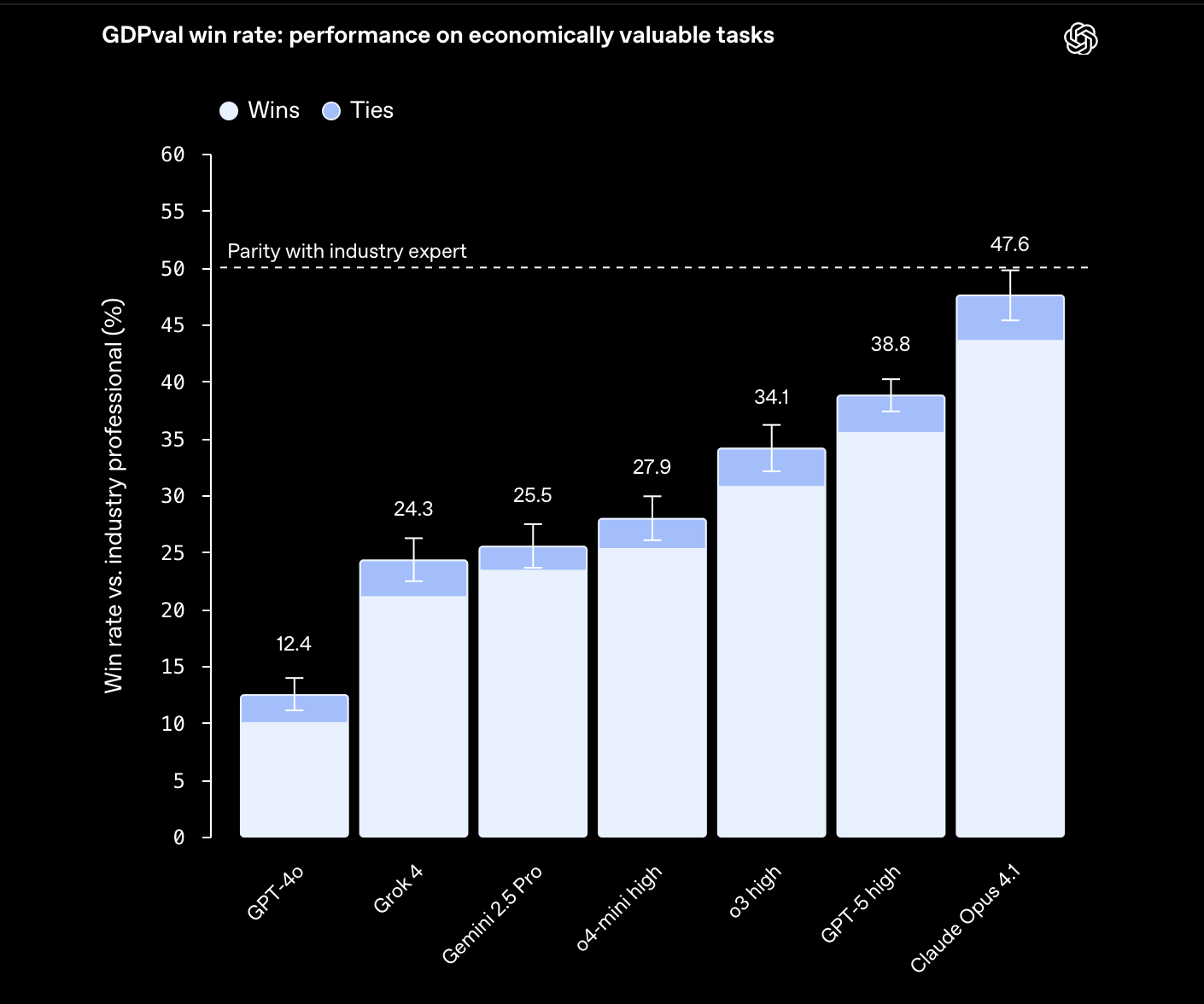

In response to this growing uncertainty, two of the foremost AI companies, OpenAI and Anthropic, have sought to validate AI’s economic promise by publicly releasing their own research and scorecards. The aim is to counter skepticism that could make it harder for them to boost sales and offset their immense development costs.

In late September, OpenAI introduced GDPval, a new evaluation system developed with industry professionals. This benchmark explicitly measures how various AI models perform on “economically useful” work across 44 occupations in the top nine sectors contributing to U.S. GDP. Based on the findings, OpenAI stated that its leading models can rival the work of professionals and can take on some repetitive, well-specified tasks “faster and at lower cost than experts”.

At the same time, the fine print stated that these estimates of cost and time savings do not capture the human oversight, iteration, and integration steps required in real workplace settings.

OpenAI’s rival, Anthropic, published its own longitudinal study, the Anthropic Economic Index, which tracks how businesses use its Claude models in practice. It reported that more than three-quarters (77%) of companies’ usage of Claude involved automation patterns, frequently including “full task delegation”.

Despite the industry’s internal reports demonstrating capability and adoption, independent analysts continue to stress the gap between technical benchmarks and genuine economic transformation.

So, while AI companies are quantifying what their models can do, academics and independent testers are measuring what they actually deliver.

The key to bridging this gap lies in a recent video of Sam Altman, which I saw on a16z’s page. He said:

> If we’re right that the model capability is going to go where we think it is going to go, then the economic value that sits there can go very very far. We can see how much demand there is we can’t serve with today’s model, but we would not be going this aggressive (on infrastructure bets) if all we had was today’s model.

In other words, there are capabilities that, once developed, will unlock massive economic gains.

Will that value run into the trillions? Will it be enough to justify the MASSIVE investments and costs? That remains to be seen.

Hardly a day goes by without OpenAI announcing plans to spend billions and billions. $300 billion for Oracle! $22.4 billion for CoreWeave! $10 billion for Broadcom!

And there are deals denominated in other (obscuring) currencies. They’re buying ten gigawatts from NVIDIA! Six gigawatts from AMD! 900,000 wafer starts a month from Korea! 230 Norwegian megawatts! 200 UAE megawatts!

The FT estimates they’ve “signed about $1tn in deals this year for computing power”.

What do you think?